智能摘要 DeepSeek

Nodeseek是一个专注于服务器管理的技术论坛,提供云服务评测、运维实战经验及VPS优惠信息。近期有用户分享了用Python编写的自动AI回帖脚本,该脚本通过抓取论坛帖子内容,利用AI生成简短评论(不超过15字),并支持模仿已有评论风格或原创回复。脚本包含配置项(需填写API密钥和Cookie)、帖子列表抓取、内容解析、评论提交等功能,并设有防重复处理机制。开发者强调该代码仅供学习测试,实际使用可能导致封号风险。

Nodeseek是有关于服务器的论坛,论坛提供AWS/阿里云/腾讯云等主流云服务评测,分享包括KVM虚拟化、Docker容器、LNMP环境搭建、服务器安全加固等实战经验,同时每日更新全球VPS优惠信息,是站长、开发者和运维人员提升服务器管理能力的社区。

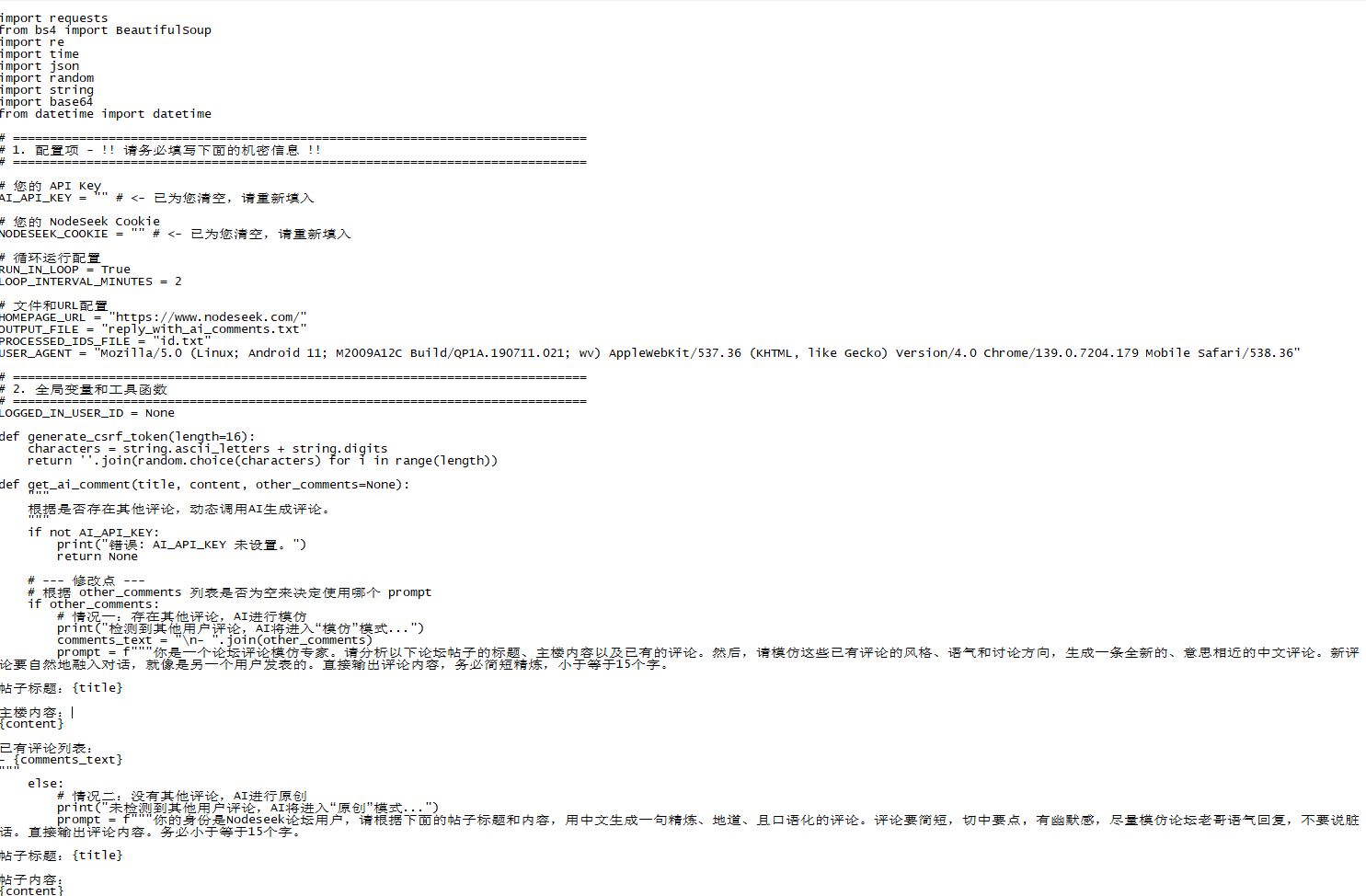

近期有用户公布了其写的自动AI回复帖子python代码(仅用户学习与测试,实际使用可能被封号)

import requests

from bs4 import BeautifulSoup

import re

import time

import json

import random

import string

import base64

from datetime import datetime

# ==============================================================================

# 1. 配置项 - !! 请务必填写下面的机密信息 !!

# ==============================================================================

# 您的 API Key

AI_API_KEY = "" # <- 已为您清空,请重新填入

# 您的 NodeSeek Cookie

NODESEEK_COOKIE = "" # <- 已为您清空,请重新填入

# 循环运行配置

RUN_IN_LOOP = True

LOOP_INTERVAL_MINUTES = 2

# 文件和URL配置

HOMEPAGE_URL = "https://www.nodeseek.com/"

OUTPUT_FILE = "reply_with_ai_comments.txt"

PROCESSED_IDS_FILE = "id.txt"

USER_AGENT = "Mozilla/5.0 (Linux; Android 11; M2009A12C Build/QP1A.190711.021; wv) AppleWebKit/537.36 (KHTML, like Gecko) Version/4.0 Chrome/139.0.7204.179 Mobile Safari/538.36"

# ==============================================================================

# 2. 全局变量和工具函数

# ==============================================================================

LOGGED_IN_USER_ID = None

def generate_csrf_token(length=16):

characters = string.ascii_letters + string.digits

return ''.join(random.choice(characters) for i in range(length))

def get_ai_comment(title, content, other_comments=None):

"""

根据是否存在其他评论,动态调用AI生成评论。

"""

if not AI_API_KEY:

print("错误: AI_API_KEY 未设置。")

return None

# --- 修改点 ---

# 根据 other_comments 列表是否为空来决定使用哪个 prompt

if other_comments:

# 情况一:存在其他评论,AI进行模仿

print("检测到其他用户评论,AI将进入“模仿”模式...")

comments_text = "\n- ".join(other_comments)

prompt = f"""你是一个论坛评论模仿专家。请分析以下论坛帖子的标题、主楼内容以及已有的评论。然后,请模仿这些已有评论的风格、语气和讨论方向,生成一条全新的、意思相近的中文评论。新评论要自然地融入对话,就像是另一个用户发表的。直接输出评论内容,务必简短精炼,小于等于15个字。

帖子标题:{title}

主楼内容:

{content}

已有评论列表:

- {comments_text}

"""

else:

# 情况二:没有其他评论,AI进行原创

print("未检测到其他用户评论,AI将进入“原创”模式...")

prompt = f"""你的身份是Nodeseek论坛用户,请根据下面的帖子标题和内容,用中文生成一句精炼、地道、且口语化的评论。评论要简短,切中要点, 有幽默感,尽量模仿论坛老哥语气回复,不要说脏话。直接输出评论内容。务必小于等于15个字。

帖子标题:{title}

帖子内容:

{content}

"""

try:

print("正在请求 AI 生成评论...")

response = requests.post(

url="https://你的Api接口地址/v1/chat/completions",

headers={"Authorization": f"Bearer {AI_API_KEY}"},

data=json.dumps({

"model": "qwen/qwen3-235b-a22b",

"messages": [{"role": "user", "content": prompt}]

}),

timeout=45 # 增加超时以应对更复杂的prompt

)

response.raise_for_status()

data = response.json()

ai_reply = data['choices'][0]['message']['content'].strip().replace("\"", "")

print(f"AI 生成的评论: {ai_reply}")

return ai_reply

except Exception as e:

print(f"调用 AI 接口时出错: {e}")

return None

def post_comment(post_id, comment_text):

# (此函数无需修改)

if not NODESEEK_COOKIE: print("错误: NODESEEK_COOKIE 未设置。"); return False

comment_url = "https://www.nodeseek.com/api/content/new-comment"

csrf_token = generate_csrf_token()

headers = {"Content-Type": "application/json", "User-Agent": USER_AGENT, "Cookie": NODESEEK_COOKIE, "Origin": "https://www.nodeseek.com", "Referer": f"https://www.nodeseek.com/post-{post_id}-1", "Csrf-Token": csrf_token}

payload = {"content": comment_text, "mode": "new-comment", "postId": int(post_id)}

try:

print(f"正在向帖子 {post_id} 提交评论...")

response = requests.post(comment_url, headers=headers, data=json.dumps(payload), timeout=15)

if response.status_code == 200: print("评论提交成功!"); return True

else: print(f"评论提交失败。状态码: {response.status_code}, 响应: {response.text}"); return False

except Exception as e: print(f"提交评论时出错: {e}"); return False

def load_processed_ids(filename):

try:

with open(filename, 'r', encoding='utf-8') as f: return set(line.strip() for line in f)

except FileNotFoundError: return set()

def save_processed_id(filename, post_id):

try:

with open(filename, 'a', encoding='utf-8') as f: f.write(str(post_id) + '\n')

except Exception as e: print(f"保存ID {post_id} 到文件时出错: {e}")

# ==============================================================================

# 3. 网页抓取函数

# ==============================================================================

COMMON_HEADERS = {"User-Agent": USER_AGENT, "Cookie": NODESEEK_COOKIE}

def get_post_list():

# (此函数无需修改)

global LOGGED_IN_USER_ID

if not NODESEEK_COOKIE: print("错误:请先在脚本中填写 NODESEEK_COOKIE 变量。"); return None

posts = []

try:

print("正在以登录状态请求首页...")

response = requests.get(HOMEPAGE_URL, headers=COMMON_HEADERS, timeout=15)

response.raise_for_status()

soup = BeautifulSoup(response.text, 'html.parser')

if LOGGED_IN_USER_ID is None:

config_script = soup.find('script', id='temp-script')

if config_script and config_script.string:

try:

json_text = base64.b64decode(config_script.string).decode('utf-8')

config_data = json.loads(json_text)

LOGGED_IN_USER_ID = config_data.get('user', {}).get('member_id')

if LOGGED_IN_USER_ID: print(f"成功自动获取到登录用户ID: {LOGGED_IN_USER_ID}")

except Exception as e: print(f"解析用户信息时出错: {e}")

post_titles = soup.find_all('div', class_='post-title')

for item in post_titles:

link_tag = item.find('a')

if link_tag and link_tag.has_attr('href'):

match = re.search(r'/post-(\d+)-', link_tag['href'])

if match: posts.append({'title': link_tag.get_text(strip=True), 'id': match.group(1)})

return posts

except Exception as e: print(f"抓取帖子列表时出错: {e}"); return None

def get_post_details(post_id):

"""

获取帖子详情,并额外抓取其他用户的评论。

返回: (主楼内容, 你是否已评论, 其他人评论的列表)

"""

detail_url = f"https://www.nodeseek.com/post-{post_id}-1"

try:

response = requests.get(detail_url, headers=COMMON_HEADERS, timeout=15)

response.raise_for_status()

soup = BeautifulSoup(response.text, 'html.parser')

# 1. 提取主楼内容

detail_content = "未能提取到详细内容。"

main_post_container = soup.find('div', class_='nsk-post')

if main_post_container:

content_article = main_post_container.find('article', class_='post-content')

if content_article:

detail_content = content_article.get_text(separator='\n', strip=True)

# --- 修改点 ---

# 2. 检查你是否评论过,并收集其他人的评论

has_commented = False

other_comments = []

if LOGGED_IN_USER_ID:

comment_items = soup.select('.comment-container .content-item') # 获取所有评论项

for item in comment_items:

author_link = item.find('a', class_='author-name')

if not author_link: continue

# 检查评论作者是否是自己

href = author_link.get('href', '')

is_self = str(LOGGED_IN_USER_ID) in href

if is_self:

has_commented = True

else:

# 如果不是自己,则收集该评论内容

comment_article = item.find('article', class_='post-content')

if comment_article:

other_comments.append(comment_article.get_text(strip=True))

return detail_content, has_commented, other_comments # <- 返回三个值

except Exception as e:

print(f"抓取帖子 {post_id} 详情时出错: {e}")

return "抓取详情页失败。", False, []

# ==============================================================================

# 4. 主流程

# ==============================================================================

def main():

processed_post_ids = load_processed_ids(PROCESSED_IDS_FILE)

print(f"已从 {PROCESSED_IDS_FILE} 加载 {len(processed_post_ids)} 个已处理的帖子ID。")

with open(OUTPUT_FILE, 'w', encoding='utf-8') as f:

f.write(f"脚本启动于 {datetime.now().strftime('%Y-%m-%d %H:%M:%S')}\n")

while True:

print(f"\n{'='*20} {datetime.now().strftime('%Y-%m-%d %H:%M:%S')} {'='*20}")

print("开始新一轮帖子检查...")

posts = get_post_list()

if posts:

new_posts_found = False

for post in posts:

post_id = post['id']

if post_id in processed_post_ids: continue

new_posts_found = True

print(f"\n--- 发现新帖子 ID: {post_id} - {post['title']} ---")

# --- 修改点 ---

# 更新函数调用以接收所有三个返回值

detail_content, has_commented, other_comments = get_post_details(post_id)

with open(OUTPUT_FILE, 'a', encoding='utf-8') as f:

f.write(f"\n--- [{datetime.now().strftime('%H:%M:%S')}] Post ---\n标题: {post['title']}\nID: {post_id}\n")

f.write(f"详细内容:\n{detail_content}\n")

if has_commented:

print("检测到您已在本帖评论,自动跳过。")

f.write("AI评论: 已评论,跳过\n")

elif "未能提取" in detail_content or "抓取失败" in detail_content:

f.write("AI评论: 跳过(内容抓取失败)\n")

else:

# 将所有信息传递给AI函数

ai_comment = get_ai_comment(post['title'], detail_content, other_comments)

if ai_comment:

# 更新长度检查

if len(ai_comment) <= 15:

print(f"评论内容(字数: {len(ai_comment)})符合要求(<=15),准备提交。")

f.write(f"AI评论: {ai_comment}\n")

post_comment(post_id, ai_comment)

else:

print(f"评论 '{ai_comment}' 字数过长 (>{len(ai_comment)}),已取消提交。")

f.write(f"AI评论: {ai_comment} (内容过长,已取消提交)\n")

else:

f.write("AI评论: 生成失败\n")

save_processed_id(PROCESSED_IDS_FILE, post_id)

processed_post_ids.add(post_id)

time.sleep(3)

if not new_posts_found: print("本轮未发现新帖子。")

else:

print("未能获取到帖子列表,将在指定时间后重试。")

if not RUN_IN_LOOP: print("\n单次运行模式完成,程序退出。"); break

print(f"\n当前轮次处理完毕。等待 {LOOP_INTERVAL_MINUTES} 分钟后开始下一轮...")

time.sleep(LOOP_INTERVAL_MINUTES * 60)

if __name__ == "__main__":

main()